It has often been said that in the event of a US default, the economy would be as bad as in 2008. I don't really understand that much about economics and finance, but from what little I do know, it seems to me that a US default would actually be far, far worse than what we saw in 2008.

In 2008, falling housing prices revealed that the world financial system had configured itself into something of a house of cards: huge institutions had placed leveraged bets on the idea that housing prices would not fall, and other huge institutions had in turn placed leveraged bets on the idea that those other institutions wouldn't lose money on their investments. When prices did fall, the institutions found that the "assets" side of their ledgers had dropped below the "liabilities" side, and they all simultaneously began to sell off their assets to reduce their liabilities. But since everyone was selling at the same time, the market was flooded and the prices of those assets dropped further, which made everyone's balance sheets look even worse.

The dynamics of a classic financial panic had set in--but the thing that eventually ended it, and prevented the Great Recession from becoming a second Great Depression, was the US federal government--or more accurately, the world's faith in the US federal government to be solvent and responsible. The panic had caused investors to want to move their money to a "safe" investment--and the safest one they could find was US dollars, or US debt. When the US government eventually bailed out the financial system it did so with borrowed dollars that were extraordinarily cheap to borrow--the interest rate was close to zero (in fact if you factored in inflation, the real interest rate was negative). The US being able to borrow large sums of money so cheaply and easily contributed to the confidence of the financial world that the system could be saved, and so in a self-fulfilling prophecy, the system was saved. The downward spiral of assets losing their value and being sold off was interrupted and though the world economy was in terrible recession, at least the financial system was once again sound.

If Congress does not raise the debt ceiling, the US will default on its debt obligations, which means that all those investors who have placed their money in US Treasuries will be stiffed when it comes time for the US to pay the interest on them. The real damage, however, isn't so much that the investors will miss out on a few payments--it's that such an event would shatter confidence in the US Treasury as a safe investment. Everyone will suddenly want to get rid of their US debt, and--just like in 2008--the mass, simultaneous selling off of US debt will cause the value of US debt to plummet, suddenly throwing everyone's balance sheets into the red. Just like in 2008, the revelation that something widely held to be safe and valuable is, in fact, not safe and not valuable, will trigger a panic and a downward spiral of sell-offs and insolvency.

Only this time, if it's the US federal government itself that has triggered the crisis, who then will play the role of the savior and rescue the financial system? When a panic occurs, the only way to stop it is to get a player big enough to interrupt the positive-feedback loop of sell-offs and insolvency. The player needs to be in a position to amass an overwhelming amount of money to do this. By consensus, the financial system had with its loans anointed the US to play this role. But do we know who the backup will be if the US fails? Will it be the EU? Will everyone sell out of their position in US debt and buy German debt instead? Or will there be no bottom to it, like the Great Depression?

The other thing is that it seems to me that however critical those mortgages ended up being to the financial system in 2008, US Treasuries are far more critical. They seem to be the cornerstone of the world financial system: governments back their currencies with US dollars and debt, and financial institutions stay solvent with them. I don't understand what would happen if those assets suddenly plummeted in value. I'm morbidly curious to find out, but, honestly, I really don't want to live in that world.

I hope the Republicans come to their senses soon...

Monday, October 7, 2013

Sunday, October 6, 2013

Why the Democrats cannot negotiate over the shutdown

It's a simple point: if Democrats make concessions to Republicans on health care, then they will set the precedent that a party that controls just one house of Congress can nullify the duly passed signature legislation of the party in power--and it can do so without making any policy concessions of its own. I don't understand how such a system can be sustainable over time.

Of course it's perfectly legitimate for Republicans to be opposed to Obamacare and to desire its repeal, but the proper method to achieve this goal is to win elections and pass a law. That is the way that Democrats got Obamacare enacted; that is the way that Republicans will have to get Obamacare repealed.

If the Republicans truly believed their own arguments--that Obamacare is a disaster--then they would do well to take steps now to increase the democratic accountability of Congress by striking a deal to abolish or reform the filibuster and return the Senate to majority rule, rather than the current practice of requiring 60 votes to get anything passed. This will make it easier for them to pass a repeal or reform of Obamacare in the coming years.

Of course, it would also make it easier for Democrats to pass legislation that strengthens Obamacare or any one of a number of scary liberal things. But that's how it should be: elections should matter. And as a liberal I would be more than willing to defend Obamacare in a fair fight at the ballot box.

Thursday, October 3, 2013

Principles are a tool to help us moralize

The point of making principle the master of morality rather than, say, intuition or tradition, is that we can guard against our own cognitive frailties. Whereas our intuitive sense of morality is malleable and easily influenced by our own interests, biases, and psychological needs, rule-like objective principles are outside of us and not subject to these pressures. Principles therefore act as an outside point of reference that we can use to navigate the murky swamps of our own minds.

When we find that we support a position that cannot be satisfactorily justified by principles, it is a clue that we have succumbed to one cognitive frailty or another, and that we need to step back and reassess our position. Though we like to think that an unprincipled position is one that is automatically and necessarily wrong, in practice an unprincipled position is one that is merely highly suspect and invites further reflection.

Note that on this view it is not the case that morality is intrinsically rational. Principles, or rational thought, are something separate: a tool that helps us moralize by allowing us to overcome various cognitive biases and blindnesses. Metaphorically, principles are a scaffolding we build for ourselves to give us a view of the moral landscape that we otherwise would not be able to attain.

But the use of rational thought to aid our moralizing can fool us into thinking that morality has properties of rational thought that it doesn't necessarily have. We tend to think, for example, that morality provides an answer for every moral question, and that it is merely a function of wisdom that determines if we find that answer or not. We also tend to think that morality is consistent, and that if properly applied it will tell us that a moral proposition is either true or false, but not both.

But I don't think that morality need be consistent, nor does it necessarily provide an answer for every moral question. Moral dilemmas are not difficult problems with a correct solution, like a mathematical problem, but rather instances where morality cannot yield an answer. In the same way that our limbs are limited in their movement and cannot bend certain ways, our morality is limited in its application and cannot answer certain questions.

If morality is not intrinsically rational--a set of objective rule-like guidelines that determine right and wrong--then it raises the question of what morality is, exactly. I think the best way to look at it is that morality is something humans do that is a part of their essential nature, like how we eat and love and sing. And like those other idiosyncratic activities, morality is irregular and organic and naturally resistant to logical codification and reductive rules. We can try to create such principles and rules so as to reflect our morality as closely as possible, but we can never abandon our morality to the rules--people make moral decisions, not rules.

In the same way that holding a position not supported by principle is a clue that there is something wrong with the position, relying exclusively upon principle to arrive at a decision is a clue that something has gone wrong with one's moralizing capacity. To be doctrinaire is to be in denial of the moral consequences of one's position: it is a retreat, a willing blindness. An exclusively principled stance means nothing if it is not accompanied by charity, empathy, kindness, and true understanding. Principles are a tool, but to apply principles blindly is like using a tool absent some larger goal. It's like hammering nails into a board for no reason.

Monday, June 10, 2013

Obama on NSA spying: "Was that wrong? Should I not have done that?"

An amusing thing about this NSA story is Barack Obama's response that he "welcomes" a debate about government surveillance. He has also said, "[w]e're going to have to make some choices as a society."

But of course, precisely the problem with the NSA program is that there could be no public debate because the whole thing was steeped in secrecy. The only reason we're having the debate now is because the whole program was leaked by a whistleblower, one whom Obama will almost certainly prosecute to the fullest extent of the law.

The whole thing reminds me of when George got busted for having sex with the cleaning lady at work.

True grit

For a long time I've had the concern that the primacy of the standardized test in American education, while perhaps doing a good job of identifying the most analytically intelligent, might also have the perverse effect of admitting a certain unfavorable personality type into the nation's elite academic institutions. The personality type I have in mind is that of the sort of person who does well at standardized tests: intelligent and thoughtful, yes, but also obedient, seeking validation from authority figures, and conformist.

What is problematic about this for society is that since elite academic institutions feed our public institutions, a culture of groupthink can take hold, and that culture can lead to disastrous institutional failures. If you have a newsroom culture that systematically defers to authority, then the government can get away with waging a baseless war. If you have an office culture in a financial firm in which dissent is punished or ignored, then the firm will go bankrupt investing in a housing bubble.

What is required to run the world is not just intelligence, but grit--the courage and the will to place one's own principles and dignity above money and status and the rebuke of authority figures. But a person who spends a lifetime dutifully completing school assignments and taking exam prep courses is not likely to have the history of failure, rejection, and hardship that builds character.

So I was not too surprised to see that the leaker of the NSA spying programs, Edward Snowden, is not a graduate of an elite university but has had something of an uneven history:

...he never completed his coursework at a community college in Maryland, only later obtaining his GED — an unusually light education for someone who would advance in the intelligence ranks.

Even if you disagree with Snowden's actions on the merits, I think everyone can acknowledge that risking his entire life and giving up everything for something he believed in was an act of courage, an act that requires true grit.

Sunday, June 9, 2013

What kind of surveillance state do we want?

[Image: A bald eagle on a fucking KEY]

Professor Balkin provocatively argues that “[t]he question is not whether we will have a surveillance state in the years to come, but what sort of state we will have.”

The professor distinguishes between authoritarian and democratic surveillance states:

What do authoritarian surveillance states do? They act as “information gluttons and information misers.” As gluttons, they take in as much information as possible....But authoritarian surveillance states also act as misers, preventing any information about themselves from being released. Their actions and the information they gather are kept secret from both the public and the rest of government.

What would a democratic surveillance state look like? Balkin argues that these states would be “information gourmets and information philanthropists.” A democratic surveillance state would limit the data it collects to the bare minimum.... A democratic surveillance state would also place an emphasis on destroying the data that the government collects.

I think this is a pretty interesting approach to the problem. For example, I've long thought about how the de facto decentralized surveillance enabled by the widespread distribution of video-capable phones has been overwhelmingly beneficial for our security--used against not only criminals, as in the Boston Marathon bombings, but also against our own government, as with the countless instances of police brutality that have been captured on video. Expanding in this direction seems to me a way of making a surveillance state compatible with democratic principles.

Critique of authoritarian surveillance

To me, the sole advantage of the authoritarian model, in which the government keeps what it knows secret, lies in cases where bad guys unwittingly divulge information because they don't know they are being spied upon. But to the extent that bad guys are suspicious of the government and careful not to communicate sensitive messages using technological means, that sole advantage is nullified. And meanwhile there are HUGE negatives with this approach, starting with the potential for abuse and blowback that occurs when the abuse is inevitably discovered--not to mention the moral issues of privacy and democratic accountability involved.

In the end, I don't think that waging a secret spy campaign is something that makes sense for America to do; it is simply not the kind of fight that suits the nation, its goals, and its ideals. An analogy might be made here between checkers and chess. In checkers, the goal is to destroy the opponent's pieces; in chess, it is to trap the opponent's king. With a "daylight" model--or democratic surveillance state--where the US makes the extent of its spying publicly known and accountable, the enemy would know exactly the capabilities of US spying and would therefore avoid certain kinds of communication. But while this would make them difficult to apprehend, it would also significantly inhibit their ability to wage terrorism. So though we may not be able to eliminate them from the board, we could still "trap" them into a limited space, capabilities-wise.

Rather than making the primary goal eliminating terrorists, we would be focusing on making terrorism hard to do. And we would get to keep the legitimacy of our government in the bargain.

Monday, April 15, 2013

Risking it all for a souvenir


In both Band of Brothers and All Quiet on the Western Front, a strange behavior of the embattled soldier is described: he will undergo extraordinary risks to his personal safety for the seemingly trivial reason of nabbing a "souvenir" from the battlefield.

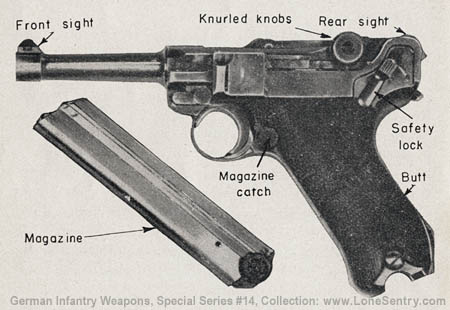

In Band of Brothers--which is a nonfictional account--soldier Donald Malarkey suddenly bounds out from behind his cover during an assault on a German position, because he thinks he can see a Luger on the body of a dead German soldier. He runs out to the body, finds that it is not a Luger after all, and scurries back to his position unharmed. He survives only because the Germans assume he is a medic.

In All Quiet on the Western Front, soldiers risk their lives scavenging No Man's Land for silken parachutes to send home to their wives and girlfriends as sewing material:

The parachutes are turned to more practical uses....Kropp and I use them as handkerchiefs. The others send them home. If the women could see at what risk these bits of rag are often obtained, they would be horrified.

Ruminating on this, Stephen Ambrose quotes Glenn Gray, a war veteran (and, later, philosopher at Colorado College), who speculates that

[p]rimarily, souvenirs appeared to give the soldier some assurance of this future beyond the destructive environment of the present. They represented a promise that he might survive.

I suppose that is one plausible theory. To me, though, it seems that at least part of the explanation must have something to do with the fact that soldiers in those circumstances have trained themselves to disregard risks to their own personal safety in general. Being in denial about the extreme physical danger of a battlefield makes it possible to charge headlong into machine gun fire to assault the enemy, but perhaps a side effect of this denial is that a soldier will also take extreme risks for lesser, even trivial goals.

In any case, this is a very surprising and interesting behavior exhibited by soldiers in battle.

Thursday, February 14, 2013

Let's end the music industry's monopoly powers

Here is a problem that capitalism presents us with: if there is something that people want that requires scarce resources to produce, then we can apply the various tenets of capitalism to devise a system that efficiently produces that thing and satisfies demand. However, the creation of this system invariably leads to the establishment of various institutions and interests that depend on it, and that strive to exploit and perpetuate the system for their own gain. But if technology advances, or some other progressive change happens, that obviates the need for the system--if, for example, technology renders the product virtually costless to produce--then there arises a conflict between what is best for society (dismantling the system or replacing it with a different one) and what is best for the incumbent institutions and special interests (taking measures to perpetuate or reinforce the system).

A very concrete example of this is the impact of the internet and personal computing on the music industry. Before these technologies, the recording and distribution of music was a costly process: it required special studio equipment, playing time on limited radio bandwidths, and the pressing of vinyl records (or later, cassettes, then CDs). And so a system needed to be devised such that the costs of making and distributing music could be recouped by the producers. This was accomplished by granting the authors a monopoly on the distribution of music: with monopolistic pricing power, the authors could recoup the costs of recording, marketing, and distribution.

This system worked well initially because technological constraints happened to enable enforcement of the monopoly power of the authors: it was infeasible to copy vinyl records, and so people were forced to buy them from record stores. Later, with the emergence of cassette tapes, copying became easier, but the quality degraded with each copy. Later still, CDs enabled perfect copying fidelity, but a person was still limited to copying the CDs that friends happened to own. Violations of the author's--or content owner's--monopoly of course occurred, but not to the extent that the recording industry could not thrive.

But this changed with the emergence of the internet, which enabled the virtually cost-free distribution of monopoly-protected music to anyone, anywhere. There was no longer a technological constraint that kept people going to the record store as their source of music. And as the internet was adopted by more and more of society, the record industry's revenues plummeted.

What had happened, though, was that the original economic rationale for the music industry had been obviated: before the internet and affordable, high quality recording equipment/software, recording and distributing music was very costly, and required scarce resources. But post internet, anyone with an okay computer and an internet connection could produce, and distribute for free, studio-quality music. The costs had been so dramatically lowered that it no longer made sense to perpetuate the legal regime (the granting of monopoly powers) that the music industry is predicated on.

Of course, by the time this technological revolution had occurred, the special interests and institutions collectively referred to as "the music industry" had been firmly entrenched. And so rather than dropping away and being replaced with a new, more fitting system, it fought aggressively to take extreme steps to perpetuate itself. It asked for extreme powers to infiltrate and compromise computing systems to prevent the duplication of music files. It asked that extreme penalties be enforced to deter duplication and distribution of music files. It has publicly campaigned on the claim that unauthorized duplication and distribution of music files is an immoral act, tantamount to stealing physical objects from a store.

These policies, arguments, and actions should be rejected because technology has advanced to a state where the system they are meant to preserve is no longer relevant. Granting monopoly powers--and, therefore, monopoly pricing power--to large companies for the production of an incredibly low-cost product is unnecessary and anticapitalistic, and stifles both the production of creative arts and their consumption. The music industry, once spawned as a means of recouping the costs of music production and distribution, now serves no societal purpose. It is a rentier, parasitic entity.

One alternative to the current system would be simply to abolish the granting of monopoly or any other exclusive powers to the authors of content. The signature benefit of such a system would be its simplicity and the fact that it would not require any invasive laws to enforce: people would be free to create and exchange bits as they saw fit. However, there would also be significant drawbacks to such a system. For one, there could arise a problem of truth-in-authorship: even if no monopoly powers were at stake, artists would not want someone else falsely claiming authorship of a piece that they created. The artist would want artistic credit and public praise for his or her works. So some legal framework may be required to give a plagiarized artist legal recourse to claim ownership of the work, even if just for non-monetary reasons. Moreover, there is the issue of compensating artists for their work, under the rationale that they deserve to be materially compensated for worthy works of art, so that they may continue to contribute such a valuable thing to society. Some schemes are compatible with a free model that could result in ample revenue for an artist: for example, an artist can have a Kickstarter-like scheme where the next work of art will not be produced/distributed until enough money is raised to recoup costs and provide a living.

However, such schemes are not a realistic source of revenue for most artists, especially ones who are not already established with their own following. Such a scheme can work for Radiohead or Louis CK; but an unknown or marginally popular musician is unlikely to be able to raise any money this way. A better system is required.

One such system could be this: society decides in advance, in terms of GDP say, how much material resources it wants to divert to the musical arts. This chunk of money represents the pie from which all artists will receive a slice. The size of the slice for each artist can be determined in a number of different ways. One way could be the allocation of government grants by an esteemed panel that decides who is worthy of compensation, and how much. Another, perhaps more democratic scheme could be the printing of virtual money that all citizens can then use to "buy" works of art, with this money then being translated to a percentage of the overall arts pie. Each citizen would be granted an equal amount of virtual money at the beginning of every year. Unused virtual arts money would be voided, increasing the "purchasing power" of the rest of the virtual money actually in circulation. In this way, the selection mechanism of a free market would be replicated, though the total "revenue" for the "arts industry" would have been a fixed amount previously agreed upon by democratic fiat.

Some economic shenanigans could arise from such a system: for example, a market would naturally arise in the buying and selling of virtual arts money. However, it is difficult to see what harm there would be in this. Moreover, steps could be taken to hinder such a market, such as not enforcing contracts involving the sale of virtual arts money, and making the arts money non-transferable by disabling this ability in its technical implementation.
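The virtual-money scheme is concrete enough to sketch. The Python below is a minimal illustration under assumptions the post does not spell out: the function name `allocate_arts_pie`, the shape of the purchase records, and the rule that each artist's real payout is strictly proportional to the virtual money spent on them are all hypothetical. Because each citizen can only spend their own allowance, the sketch treats the money as non-transferable, and unspent allowance is voided simply by never being counted.

```python
from collections import defaultdict


def allocate_arts_pie(pie, allowance, citizens, purchases):
    """Split a fixed arts budget among artists by share of virtual money spent.

    Hypothetical sketch, not a worked-out policy:
      pie       -- real money set aside for the arts this year
      allowance -- virtual arts money granted to every citizen
      citizens  -- ids of citizens who received an allowance
      purchases -- (citizen, artist, amount) records of virtual-money spending
    """
    remaining = {c: allowance for c in citizens}
    spent_on = defaultdict(float)
    for citizen, artist, amount in purchases:
        available = remaining.get(citizen, 0)
        # Citizens can only spend their own allowance (the money is
        # non-transferable), so a purchase beyond what is left is capped.
        amount = min(amount, available)
        if amount <= 0:
            continue
        remaining[citizen] = available - amount
        spent_on[artist] += amount

    total_spent = sum(spent_on.values())
    if total_spent == 0:
        return {}
    # Unspent allowance is voided: only money actually spent is counted,
    # so each spent unit is worth pie / total_spent of real money.
    return {artist: pie * amt / total_spent for artist, amt in spent_on.items()}


payouts = allocate_arts_pie(
    pie=1_000_000,
    allowance=100,
    citizens=["a", "b", "c"],
    purchases=[
        ("a", "Band X", 60),
        ("a", "Band Y", 40),
        ("b", "Band X", 50),
        ("c", "Band Y", 150),  # capped at c's allowance of 100
    ],
)
print(payouts)  # {'Band X': 440000.0, 'Band Y': 560000.0}
```

In this example citizen b leaves 50 units unspent, so only 250 units count, and each one is worth 4,000 of the real pie. A real design would also need rules for rounding, fraud, and the timing of purchases, which this sketch ignores.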

What do you think?

A very concrete example of this is the impact of the internet and personal computing on the music industry. Before these technologies, the recording and distribution of music was a costly process: it required special studio equipment, playing time on limited radio bandwidths, and the pressing of vinyl records (or later, cassettes, then CDs). And so a system needed to be devised such that the costs of making and distributing music could be recouped by the producers. This was accomplished by granting the authors a monopoly on the distribution of music: with monopolistic pricing power, the authors could recoup the costs of recording, marketing, and distribution.

This system worked well initially because technological constraints happened to enable enforcement of the monopoly power of the authors: it was infeasible to copy vinyl records, and so people were forced to buy them from record stores. Later with the emergence of cassete tapes copying was easier but the quality degraded with each copy. Later still, CD's enabled perfect copying fidelity, however a person was still limited to copying the CDs that friends happened to own. Violations of the author's--or content owner's--monopoly of course occurred, but not to the extent that the recording industry could not thrive.

But this changed with the emergence of the interent, which now enabled the virtually cost-free distribution of monopoly-protected music to anyone, anywhere. There was no longer a technological constraint that kept people going to the record store as their source of music. And as internet use became adapted by more and more of society, the record industry's revenues plummeted.

What had happened, though, was that the original economic rationale for the music industry had been obviated: before the internet and affordable, high quality recording equipment/software, recording and distributing music was very costly, and required scarce resources. But post internet, anyone with an okay computer and an internet connection could produce, and distribute for free, studio-quality music. The costs had been so dramatically lowered that it no longer made sense to perpetuate the legal regime (the granting of monopoly powers) that the music industry is predicated on.

Of course, by the time this technological revolution had occurred, the special interests and institutions collectively referred to as "the music industry" had become firmly entrenched. And so rather than falling away and being replaced by a new, more fitting system, the industry fought aggressively to perpetuate itself. It asked for sweeping powers to infiltrate and compromise computing systems to prevent the duplication of music files. It asked that severe penalties be imposed to deter the duplication and distribution of music files. And it has publicly campaigned on the claim that unauthorized duplication and distribution of music files is an immoral act, tantamount to stealing physical objects from a store.

These policies, arguments, and actions should be rejected because technology has advanced to a state where the system they are meant to preserve is no longer relevant. Granting monopoly powers--and, therefore, monopoly pricing power--to large companies for the production of an incredibly low-cost product is unnecessary and anticapitalistic, and stifles both the production of creative arts and their consumption. The music industry, originally created as a means of recouping the costs of music production and distribution, now serves no societal purpose. It is a rentier, parasitic entity.

One alternative to the current system would be simply to abolish the granting of monopoly or any other exclusive powers to the authors of content. The signature benefits of such a system would be its simplicity and the fact that it would require no invasive laws to enforce: people would be free to create and exchange bits as they saw fit. However, such a system would also have significant drawbacks. For one, there could arise a problem of truth-in-authorship: even with no monopoly powers at stake, artists would not want someone else falsely claiming authorship of a piece they created. An artist wants artistic credit and public praise for his or her works. So some legal framework may be required to give a plagiarized artist recourse to claim ownership of the work, even if only for non-monetary reasons. Moreover, there is the issue of compensating artists, under the rationale that they deserve material compensation for worthy works of art so that they may continue to contribute such a valuable thing to society. Some revenue schemes are compatible with a free model and could still bring an artist ample revenue: for example, an artist can run a Kickstarter-like campaign in which the next work will not be produced or distributed until enough money is raised to recoup costs and provide a living.

However, such schemes are not a realistic source of revenue for most artists, especially ones who are not already established with their own following. Such a scheme can work for Radiohead or Louis C.K., but an unknown or marginally popular musician is unlikely to raise any money this way. A better system is required.

One such system could be this: society decides in advance, as a share of GDP say, how much of its material resources it wants to divert to the musical arts. This chunk of money represents the pie from which every artist receives a slice. The size of each artist's slice could be determined in a number of ways. One would be the allocation of government grants by an esteemed panel that decides who is worthy of compensation, and how much. Another, perhaps more democratic, scheme would be the issuing of virtual money that all citizens can then use to "buy" works of art, with each artist's virtual takings then translated into a percentage of the overall arts pie. Each citizen would be granted an equal amount of virtual money at the beginning of every year. Unused virtual arts money would be voided, increasing the "purchasing power" of the rest of the virtual money actually in circulation. In this way, the selection mechanism of a free market would be replicated, though the total "revenue" for the "arts industry" would be a fixed amount agreed upon in advance by democratic fiat.
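To make the arithmetic of the virtual-money scheme concrete, here is a minimal sketch, assuming the simplest possible rule: each artist's share of the fixed pie is proportional to the virtual money citizens actually spent on that artist, and unspent money is simply left out of the count. The function name `allocate_arts_pie` and its parameters are hypothetical, made up for illustration; this is one way the idea could work, not a worked-out policy.

```python
from collections import defaultdict


def allocate_arts_pie(pie, allowance, spending):
    """Split a fixed arts budget among artists (hypothetical sketch).

    pie       -- total real money set aside for the arts (e.g. a share of GDP)
    allowance -- virtual arts money each citizen receives per year (assumed equal)
    spending  -- {citizen: {artist: virtual amount spent}}
    Returns {artist: real payout}.
    """
    totals = defaultdict(float)
    for citizen, purchases in spending.items():
        if sum(purchases.values()) > allowance:
            raise ValueError(f"{citizen} spent more than the yearly allowance")
        for artist, amount in purchases.items():
            totals[artist] += amount

    # Only virtual money actually spent counts toward the denominator.
    # Unspent money is voided, which raises the real value of every unit spent.
    circulated = sum(totals.values())
    if circulated == 0:
        return {}
    rate = pie / circulated  # real money per unit of virtual arts money spent
    return {artist: amount * rate for artist, amount in totals.items()}
```

For example, with a pie of 1,000,000 and an allowance of 100, if one citizen spends 60 on one band and 40 on another while a second citizen spends only 50 on the first band, just 150 units circulate. The first band receives 110/150 of the pie and the second 40/150; the second citizen's unspent 50 units count for nothing, so everyone else's spending is worth proportionally more.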

Some economic shenanigans could arise from such a system: for example, a market would naturally develop in the buying and selling of virtual arts money. However, it is difficult to see what harm there would be in this. Moreover, steps could be taken to hinder such a market, such as refusing to enforce contracts involving the sale of virtual arts money, and making the arts money non-transferable by disabling transfers in its technical implementation.

What do you think?
